Nonlinearity makes photonic neural networks smarter

Researchers at the Institute for Quantum Electronics have produced the core processing unit of a photonic neural network in which optical nonlinearity plays a key role in making the network more powerful.

Artificial intelligence (AI) based on neural networks aims to solve complex problems by emulating the human brain – and, much like the brain itself, it's full of surprises. It turns out, for example, that a very elegant way to create a neural network is to use random matrices. In other words, the links between the input and output of the network are assigned fixed and completely random weights. At the output, linear regression methods can then be used to train the network.
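The idea of fixed random weights with a trained linear readout can be illustrated with a small numerical sketch. Everything in it is an assumption chosen for illustration and is not taken from the researchers' work: the toy two-cluster data, the layer sizes, the tanh activation, and the use of ridge regression (a regularised form of linear regression) for the readout.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: two noisy clusters in 20 dimensions.
n_samples, n_inputs, n_hidden = 400, 20, 200
labels = rng.integers(0, 2, size=n_samples)
X = rng.normal(size=(n_samples, n_inputs)) + 1.5 * labels[:, None]

# Fixed random weights: drawn once and never trained.
W = rng.normal(size=(n_inputs, n_hidden)) / np.sqrt(n_inputs)
b = rng.normal(size=n_hidden)


def hidden_features(x):
    """Project inputs through the fixed random layer and apply tanh."""
    return np.tanh(x @ W + b)


def train_readout(H, y, ridge=1e-2):
    """Ridge regression: solve (H^T H + ridge * I) beta = H^T y."""
    A = H.T @ H + ridge * np.eye(H.shape[1])
    return np.linalg.solve(A, H.T @ y)


# Train the readout on the first 300 samples, test on the remaining 100.
H_train = hidden_features(X[:300])
H_test = hidden_features(X[300:])
targets = 2.0 * labels - 1.0  # map class labels {0, 1} to {-1, +1}
beta = train_readout(H_train, targets[:300])

predictions = (H_test @ beta > 0).astype(int)
accuracy = float(np.mean(predictions == labels[300:]))
print(f"test accuracy: {accuracy:.2f}")
```

Only `beta` is learned here; the random matrix `W` stays untouched, which is what makes it possible to replace it with a physical system.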

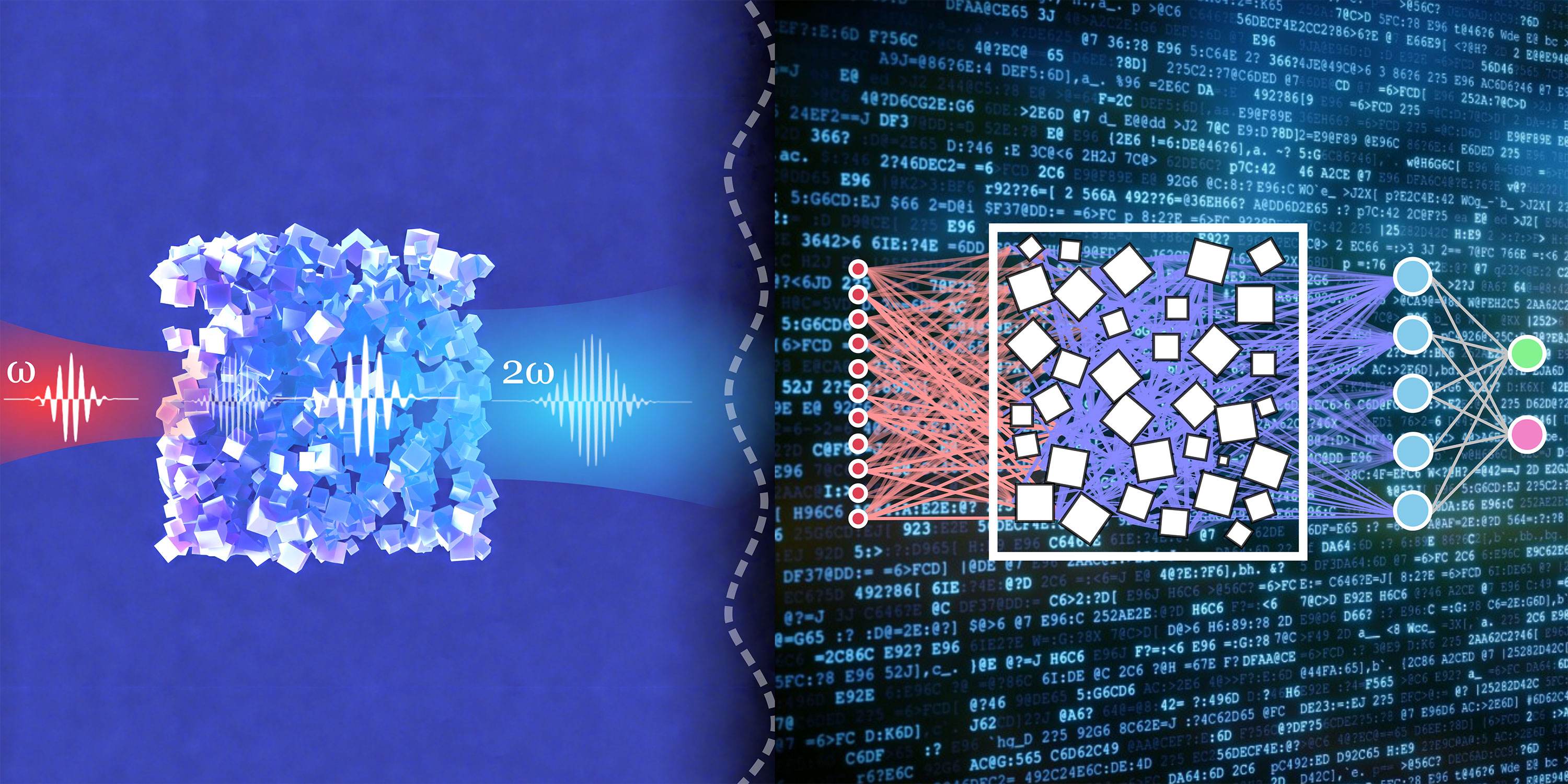

While this is a powerful method on paper, implementing it on a computer can be tricky because of the memory requirements for large random matrices. Also, power consumption and computing time are likely to become an issue with digital computers as neural networks become larger and more complex. In recent years, researchers have tried to overcome these problems by using the natural physical equivalent of a random matrix – a disordered optical material – and realising the necessary matrix multiplication through light scattering.
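A rough back-of-the-envelope calculation gives a feel for the memory issue. It uses the node counts quoted later in this article (more than 27,000 inputs and 3,500 outputs) and assumes, purely for illustration, a dense matrix whose entries are stored as 8-byte complex numbers; neither assumption comes from the researchers.

```python
# Node counts quoted in the article; treated here as exact sizes.
n_inputs, n_outputs = 27_000, 3_500

# Assumption: a dense matrix with one complex64 value (8 bytes) per entry.
bytes_per_entry = 8

n_entries = n_inputs * n_outputs
memory_mb = n_entries * bytes_per_entry / 1e6

print(f"{n_entries:,} entries, about {memory_mb:.0f} MB")
# prints "94,500,000 entries, about 756 MB"
```

Each input would also cost one multiply-accumulate operation per matrix entry on a digital machine, whereas in a scattering medium the multiplication happens as the light propagates.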

Such photonic neuromorphic computers promise to overcome the weaknesses of digital computers mentioned above, but they also have a major drawback: photon scattering is a linear process. This means that the activation functions of the network nodes, which determine how the inputs to a node result in an output, are also linear. This, in turn, implies that the resulting neural networks are essentially single-layer networks and therefore, in AI jargon, not particularly "expressive".
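Why linear nodes collapse into a single layer can be checked numerically. The sketch below uses arbitrary random matrices and a squaring nonlinearity; both are illustrative choices and not a model of the experiment.

```python
import numpy as np

rng = np.random.default_rng(1)
x = rng.normal(size=8)
y = rng.normal(size=8)
W1 = rng.normal(size=(16, 8))   # first "layer"
W2 = rng.normal(size=(4, 16))   # second "layer"


def linear_stack(v):
    """Two layers with linear (identity) activation in between."""
    return W2 @ (W1 @ v)


def nonlinear_stack(v):
    """Same two layers with an element-wise square in between."""
    return W2 @ ((W1 @ v) ** 2)


# Two linear layers equal one layer with the merged matrix W2 @ W1.
collapses = np.allclose(linear_stack(x), (W2 @ W1) @ x)

# A linear map is additive; the nonlinear stack is not.
linear_is_additive = np.allclose(
    linear_stack(x + y), linear_stack(x) + linear_stack(y)
)
nonlinear_is_additive = np.allclose(
    nonlinear_stack(x + y), nonlinear_stack(x) + nonlinear_stack(y)
)

print(collapses, linear_is_additive, nonlinear_is_additive)
```

However many linear layers are stacked, the result can always be written as one matrix; a nonlinearity between the layers is what prevents this merging.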

In a joint effort, a team of researchers led by Rachel Grange at the Institute for Quantum Electronics in Zurich and by Sylvain Gigan at the Laboratoire Kastler Brossel (LKB) in Paris, together with colleagues from Italy and China, showed that photonic neural networks can be made smarter by using a disordered material made of lots of tiny crystals that can double the frequency of incoming light in a nonlinear process. In their paper, recently published in Nature Computational Science, they demonstrate that their approach leads to a sizeable increase in performance over simple linear scattering.

Core processing unit made of disordered nanoparticles

The task of the ETH researchers was to produce and characterise the core processing unit of the photonic neural network, which was then integrated into an experimental setup at the LKB. "Our group provided the samples and our expertise on optical nonlinearity," says Alfonso Nardi, a postdoctoral researcher working with Rachel Grange. He and his colleagues used tiny crystals of lithium niobate (LiNbO3), chemically synthesised to obtain individual crystals with sizes between 100 and 400 nanometres. The suspension containing the crystals was deposited on a substrate; once the solvent had evaporated, a solid slab with a thickness of five micrometres and containing randomly oriented nanocrystals remained.

Grange and her collaborators chose the material and particle size such that light scattering was maximised, to the point that the mean free path of photons in the slab was below one optical wavelength. At the same time, the lithium niobate crystals – which have a non-centrosymmetric crystal lattice and, therefore, a non-vanishing second-order (χ⁽²⁾) nonlinearity – can double the frequency of incoming light through the nonlinear process of second harmonic generation. As the crystals are randomly oriented, there's always global second harmonic emission regardless of the phase-matching conditions.

To feed data into the photonic network, the researchers at the LKB used a spatial light modulator that converts images or numerical values into optical phases. A pulsed laser at 800 nm sends photons through the modulator, and the photons are then scattered multiple times inside the lithium niobate slab. Through second harmonic generation, photons at 400 nm are created and also scattered. For both wavelengths, the multiple and phase-coherent scattering events lead to characteristic speckle patterns, which are separated by a dichroic mirror and then recorded on CCD cameras.
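The chain from phase encoding to two speckle patterns can be mimicked with a heavily simplified toy model. It is a sketch under stated assumptions and not the model used in the paper: the disordered slab is replaced by complex Gaussian random matrices, frequency doubling is reduced to a single element-wise squaring of the scattered field (in the real slab it occurs throughout the material), and all sizes and names such as `T_in` or `speckles` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)


def random_transmission(n_out, n_in):
    """Complex Gaussian matrix, used as a stand-in for a disordered medium."""
    real = rng.normal(size=(n_out, n_in))
    imag = rng.normal(size=(n_out, n_in))
    return (real + 1j * imag) / np.sqrt(2 * n_in)


n_pixels, n_modes, n_camera = 64, 256, 128    # illustrative sizes
T_in = random_transmission(n_modes, n_pixels)   # scattering of the input light
T_lin = random_transmission(n_camera, n_modes)  # fundamental light to camera 1
T_shg = random_transmission(n_camera, n_modes)  # doubled light to camera 2


def speckles(data, amplitude=1.0):
    """Toy (fundamental, second-harmonic) intensities for data in [0, 1]."""
    field = amplitude * np.exp(1j * np.pi * data)  # phase-only encoding
    inner = T_in @ field                           # field inside the slab
    fundamental = np.abs(T_lin @ inner) ** 2
    # Assumption: frequency doubling modelled as squaring the local field.
    second_harmonic = np.abs(T_shg @ inner**2) ** 2
    return fundamental, second_harmonic


data = rng.uniform(size=n_pixels)
f1, s1 = speckles(data, amplitude=1.0)
f2, s2 = speckles(data, amplitude=2.0)

# Doubling the field amplitude scales the fundamental intensity by about 4
# and the second-harmonic intensity by about 16.
print(np.mean(f2 / f1), np.mean(s2 / s1))
```

In this picture the two camera images play the role of the network's output nodes, and the different scaling of the two patterns reflects the quadratic dependence of second harmonic generation on the incoming field.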

Superior performance thanks to nonlinearity

From the speckle patterns, the network could be trained using regression methods. To show the strength of their system, which effectively realised more than 27,000 input and 3,500 output nodes, the researchers applied it to a range of machine learning tasks from image recognition to graph classification. In one such task, the photonic neural network was trained to recognise sign language digits, in which the numbers from 0 to 9 are represented by different combinations of opened fingers. After the training, the researchers determined how many times the network got the right answer using either the linearly scattered photons or those resulting from the nonlinear second harmonic generation. The result was clear: while the linear neural network accurately recognised the digits in about 74% of cases on average, the nonlinear network achieved a hit rate close to 86%.

"This is a first important step towards establishing optical nonlinearity as a key factor for the future of photonic computing," says postdoctoral researcher Andrea Morandi. To further improve the energy efficiency of the photonic network, the researchers plan to use a continuous-wave rather than pulsed laser. This could be achieved, for example, by using an optical cavity or by engineering novel nonlinear materials with a higher second-harmonic yield.

Reference

Wang, H., Hu, J., Morandi, A. et al. Large-scale photonic computing with nonlinear disordered media. Nat. Comput. Sci. 4, 429–439 (2024). DOI: 10.1038/s43588-024-00644-1